What You Really Want Are Intelligent Apps (Part 2: Automating AI Foundry)

Welcome back to Stay In Sync, a monthly (maybe one day bi-weekly? weekly?) blog where the premise is simple: I share information that I wish I’d had before embarking on a project. We’re picking up where we left off last time, talking about building production-ready intelligent applications (if you missed last month’s post - here you go (link)). This time, we’re going to look at how to leverage Terraform to automate the deployment of AI Foundry. We’ll also talk about the individual components on the Azure side and on the Terraform side that make this come together.

What’s AI Foundry?

In Microsoft’s own words, AI Foundry is a unified Azure platform-as-a-service offering for enterprise AI operations, model builders, and application development. In my words, it’s a collection of services that have existed for years and have now been rebranded under an AI-friendly umbrella. For this project, we’ll be taking a closer look at the following resources in particular:

AI Foundry Project

AI Search

OpenAI Deployment

Agents

AI Foundry Project: Think of this as your AI workspace's home base - it's the container that holds all your AI experiments, models, and configurations in one organized place. It's like having a dedicated project folder, but one that actually knows how to manage AI workloads and keeps track of all your model versions, datasets, and collaborative work.

Now here's where Microsoft's naming gets fun - there are actually two completely different architectures both called "AI Foundry Projects." Hub-based projects live under AI Hubs (organizational containers for billing/security) and are reskinned Azure Machine Learning instances with access to 11,000+ models. Foundry Projects use the Cognitive Services provider instead - you get ~100 Microsoft-hosted models, but OpenAI deployments live directly in your AI Services account.

Think "bring your own compute" vs "Microsoft hosts everything." We're automating the newer Foundry Projects approach today.

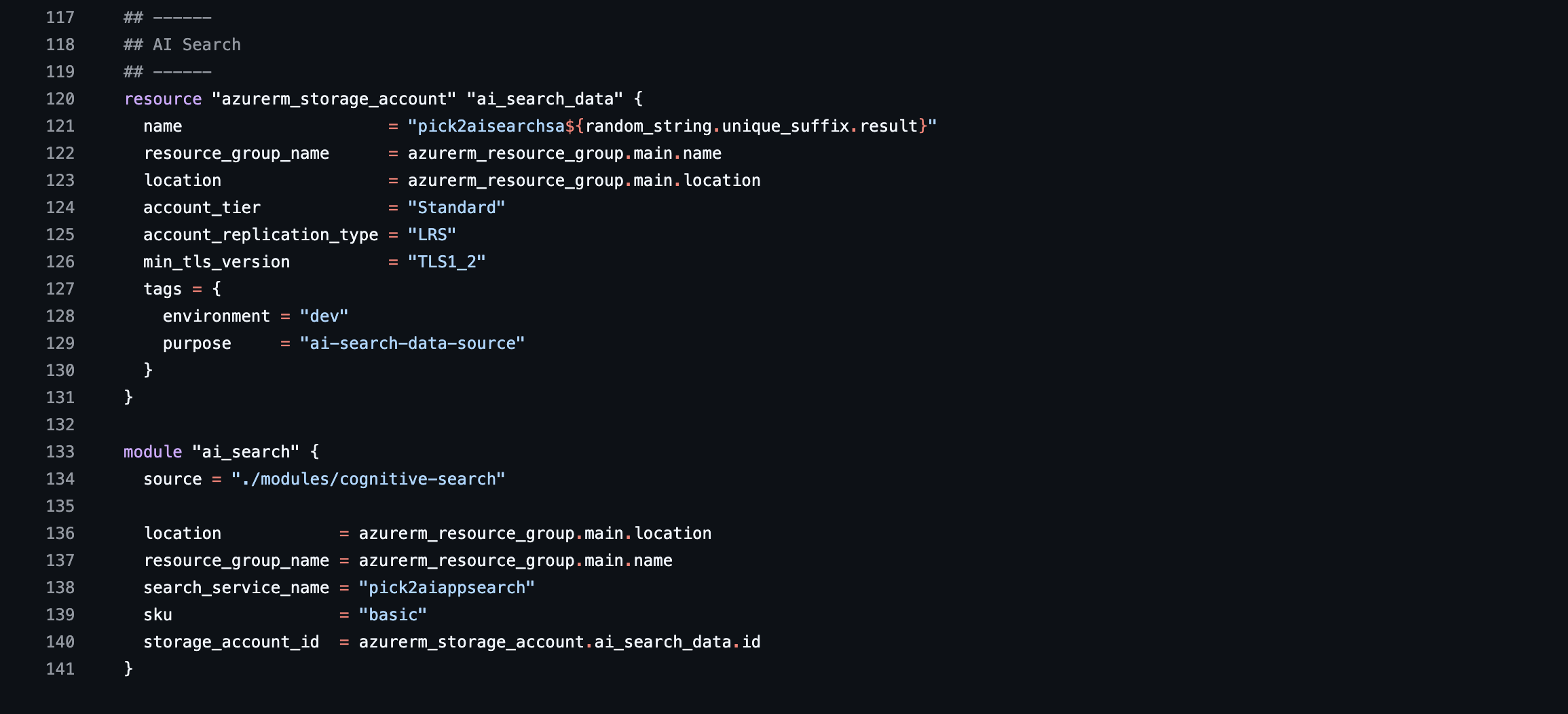

AI Search: This is Microsoft's rebrand of what used to be Azure Cognitive Search (see what I mean about the umbrella rebrand?). It's your intelligent search engine that doesn't just find text matches but actually understands context and meaning. Perfect for building those "smart search" features that make users think your app can read their minds.

OpenAI Services: Your gateway to the GPT models everyone's talking about. This service gives you API access to OpenAI's language models (GPT-4, GPT-3.5, etc.) but with enterprise-grade security and compliance. It's like having ChatGPT's brain, but one that plays nice with corporate IT policies.

Deployments: These are your live, running model instances - basically where your AI models go from "cool experiment" to "actual production service that customers use." Think of them as the bridge between your trained models and the real world, complete with scaling, monitoring, and all that production-ready goodness.

Agents: The new kid on the block that lets you build AI assistants that can actually do things, not just chat. These can call functions, use tools, and chain together multiple AI operations to accomplish complex tasks. It's like giving your AI a set of hands to interact with your applications and data.

The Challenge of Automating These Resources

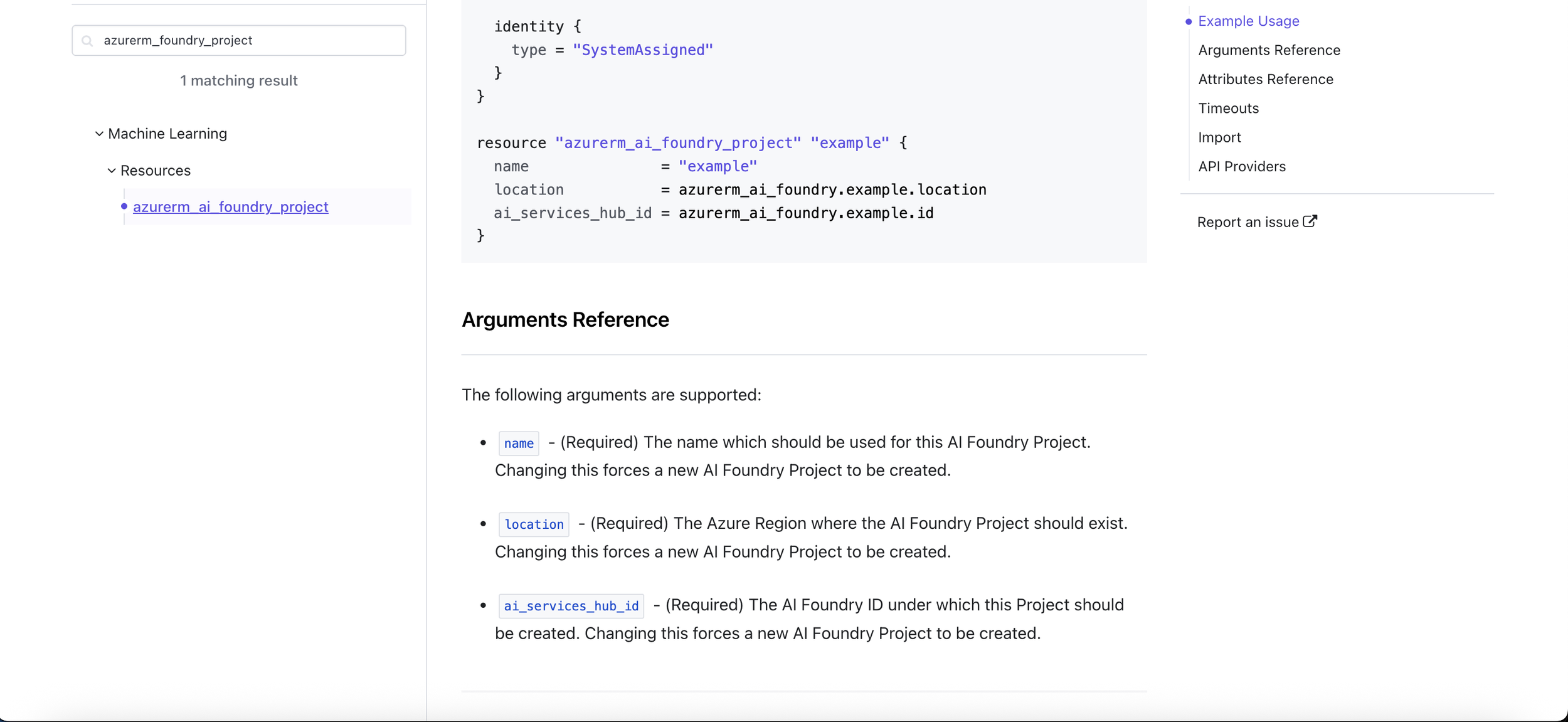

The main challenge in creating Terraform definitions for all of these resources is that not all of them exist yet. This stuff is all happening really fast (you could argue that some of this is preview software, but I won’t go down that rabbit hole), so sometimes the open-source community hasn’t built all of the resources yet. For example, if you want to provision a regular AI Foundry Project (NOT a Hub Project), there’s not currently a native resource for that. So we leverage the generic AzAPI resource:

(This actually makes a hub project! Looks like a fix is on the way: Issue #29956)
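
For the Cognitive Services-based Foundry Project specifically, a minimal sketch of the AzAPI approach might look like the following. Treat it as an illustration rather than the exact code from my modules: the variable names, the resource names, the region, and the API version (2025-04-01-preview) are all assumptions, and the API version in particular is worth checking against whatever is current when you read this.

```hcl
terraform {
  required_providers {
    azapi = {
      source  = "Azure/azapi"
      version = "~> 2.0"
    }
  }
}

provider "azapi" {}

# Hypothetical inputs; names and defaults are illustrative only.
variable "resource_group_id" {
  type        = string
  description = "Resource ID of an existing resource group."
}

variable "location" {
  type    = string
  default = "eastus2"
}

variable "account_name" {
  type        = string
  description = "Name of the AI Services account (also used as its custom subdomain)."
}

# AI Services account that acts as the parent of the Foundry Project.
resource "azapi_resource" "ai_services" {
  type      = "Microsoft.CognitiveServices/accounts@2025-04-01-preview" # assumed API version
  name      = var.account_name
  parent_id = var.resource_group_id
  location  = var.location

  # Preview API versions may not be in the provider's embedded schema yet.
  schema_validation_enabled = false

  identity {
    type = "SystemAssigned"
  }

  body = {
    kind = "AIServices"
    sku = {
      name = "S0"
    }
    properties = {
      # This flag is what allows projects to be created under the account.
      allowProjectManagement = true
      customSubDomainName    = var.account_name
    }
  }
}

# The Foundry Project itself, created as a child of the account above.
resource "azapi_resource" "project" {
  type      = "Microsoft.CognitiveServices/accounts/projects@2025-04-01-preview" # assumed API version
  name      = "example-project" # hypothetical name
  parent_id = azapi_resource.ai_services.id
  location  = var.location

  schema_validation_enabled = false

  identity {
    type = "SystemAssigned"
  }

  body = {
    properties = {
      displayName = "Example project"
      description = "Foundry Project created through AzAPI"
    }
  }
}
```

The key detail is the resource type: the project lives under Microsoft.CognitiveServices, not Microsoft.MachineLearningServices, which is what separates it from a hub-based project.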

The Solution: Custom Modules

To manage this, I made custom modules so that I can figure this stuff out once and have a good baseline to build on top of as the infrastructure continues to evolve. These are the modules that we’ll highlight in this blog:

ai-hub: This module provisions the full AI Hub architecture with all the underlying infrastructure - the Hub itself, a connected Project, AI Services account, storage, and Key Vault with proper role assignments. It's your one-stop shop for getting the "bring your own compute" AML-based setup running with all the necessary plumbing connected. Perfect when you need access to those 11,000+ models and want everything properly wired together from day one.

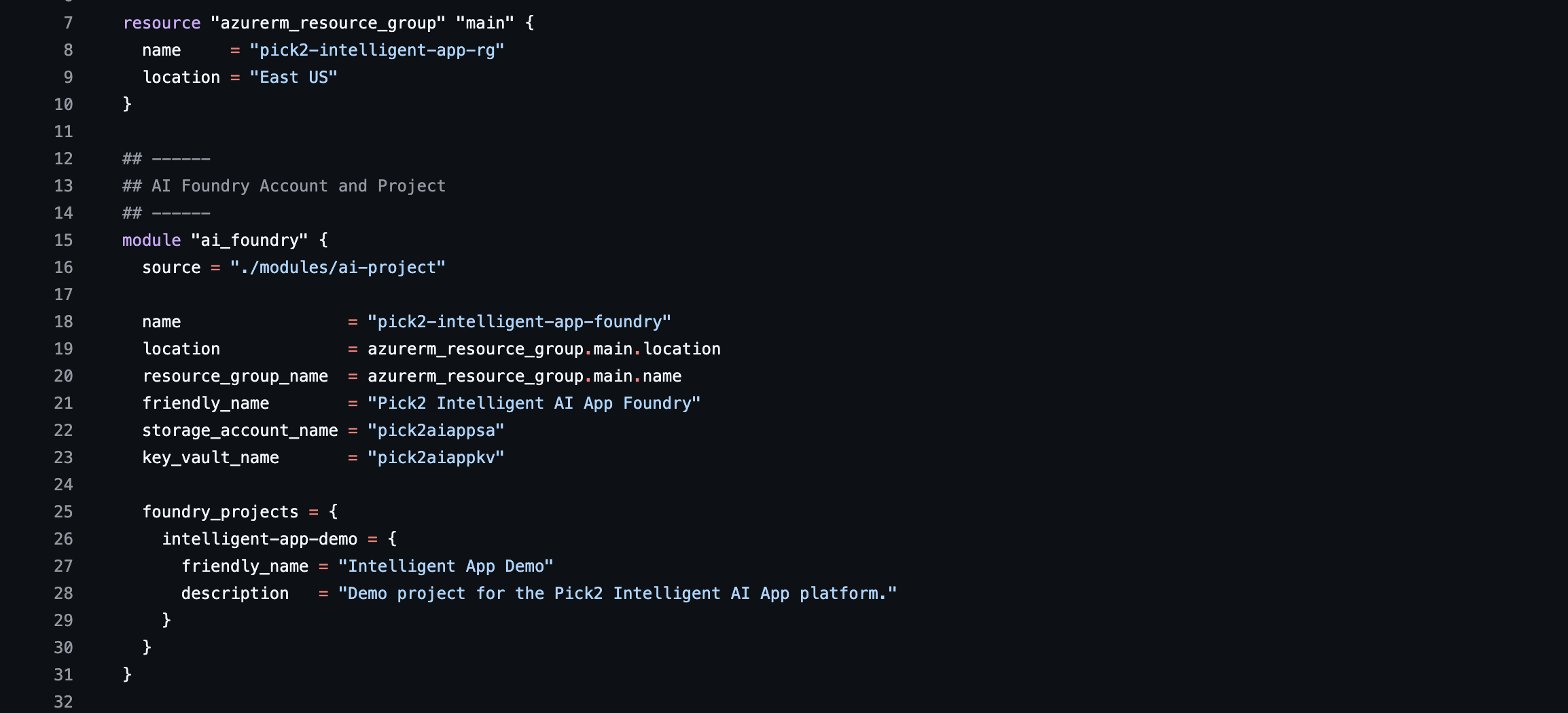

ai-project: This module creates the newer Cognitive Services-based AI Foundry setup, using the AzAPI provider to handle the parts that don't have native Terraform resources yet. It provisions an AI Services account, enables project management on it, then creates one or more Foundry Projects as sub-resources - giving you that streamlined "Microsoft hosts everything" approach with OpenAI models deployable directly to the account. This is the future-forward option that Microsoft is pushing, even if it means dealing with some preview APIs.

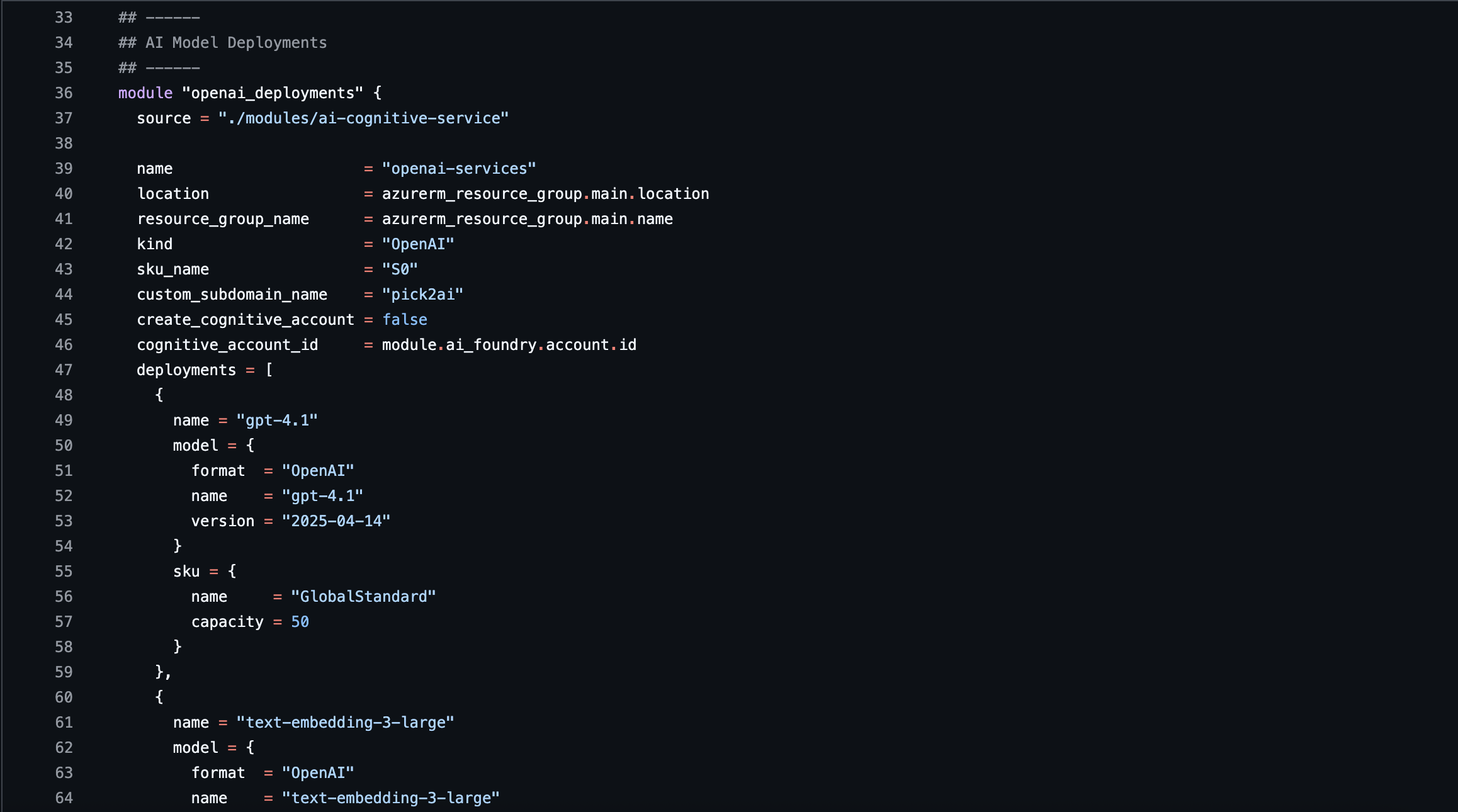

ai-cognitive-service: This module handles OpenAI and other cognitive service deployments with flexibility in mind - it can either create a new Cognitive Services account or deploy models to an existing one. It's your go-to for spinning up GPT-4, GPT-3.5, or other cognitive models with proper SKU and capacity management.
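
To make the "new account or existing account" idea concrete, here is a rough sketch of how a module like this could be structured. It is not the module's actual source: every variable name is hypothetical, and it assumes a recent AzureRM provider where azurerm_cognitive_deployment takes a sku block (older releases used a scale block instead).

```hcl
# Hypothetical module inputs.
variable "create_account" {
  type        = bool
  default     = true
  description = "Create a new Cognitive Services account, or reuse an existing one."
}

variable "existing_account_id" {
  type        = string
  default     = null
  description = "ID of an existing account; used only when create_account is false."
}

variable "name" {
  type = string
}

variable "location" {
  type = string
}

variable "resource_group_name" {
  type = string
}

variable "deployments" {
  description = "Model deployments keyed by deployment name."
  type = map(object({
    model_name    = string
    model_version = string
    sku_name      = optional(string, "Standard")
    capacity      = optional(number, 10)
  }))
  default = {}
}

# Created only when the caller asks for a new account.
resource "azurerm_cognitive_account" "this" {
  count               = var.create_account ? 1 : 0
  name                = var.name
  location            = var.location
  resource_group_name = var.resource_group_name
  kind                = "OpenAI"
  sku_name            = "S0"
}

locals {
  # one() returns null for an empty list, so coalesce falls through
  # to the existing account ID when no account was created.
  account_id = coalesce(one(azurerm_cognitive_account.this[*].id), var.existing_account_id)
}

# One deployment per entry in var.deployments.
resource "azurerm_cognitive_deployment" "this" {
  for_each             = var.deployments
  name                 = each.key
  cognitive_account_id = local.account_id

  model {
    format  = "OpenAI"
    name    = each.value.model_name
    version = each.value.model_version
  }

  sku {
    name     = each.value.sku_name
    capacity = each.value.capacity
  }
}
```

Using an explicit create_account flag instead of checking whether the ID is null keeps the count known at plan time, even when the existing account ID comes from another resource in the same apply.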

ai-agent: This module creates AI agents (assistants) by making direct REST API calls to the OpenAI-compatible endpoints, since there's no native Terraform resource for agents yet. It uses the Mastercard REST API provider (Mastercard/restapi) to manage the full lifecycle of your AI assistants with proper state tracking and metadata management. This is where the "AI that can actually do things" magic happens - perfect for building those function-calling, tool-using assistants that go beyond simple chat.
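
As a hedged illustration of the REST-provider approach, the sketch below creates an assistant through the Azure OpenAI Assistants endpoint. The endpoint path, the API version (2024-05-01-preview), the api-key header authentication, the variable names, and the agent's name, model, and instructions are all assumptions made for the example; the real module may differ on any of them.

```hcl
terraform {
  required_providers {
    restapi = {
      source = "Mastercard/restapi"
    }
  }
}

# Hypothetical inputs.
variable "openai_endpoint" {
  type        = string
  description = "Base URL of the account, e.g. https://<account>.openai.azure.com"
}

variable "api_key" {
  type      = string
  sensitive = true
}

provider "restapi" {
  uri                  = var.openai_endpoint
  write_returns_object = true

  # Assumption: the Assistants API modifies an assistant with POST,
  # whereas the provider defaults to PUT for updates.
  update_method = "POST"

  headers = {
    "api-key"      = var.api_key
    "Content-Type" = "application/json"
  }
}

resource "restapi_object" "agent" {
  path         = "/openai/assistants"
  query_string = "api-version=2024-05-01-preview" # assumed API version
  id_attribute = "id"                             # the service generates the assistant ID

  data = jsonencode({
    name         = "example-agent"                # hypothetical
    model        = "gpt-4o"                       # name of an existing model deployment
    instructions = "You are a helpful assistant." # hypothetical
  })
}
```

Because the provider stores the returned ID in state, later plans can read, update, and destroy the same assistant instead of creating duplicates.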

These modules use the AzureRM Terraform resources where they exist and lean on the AzAPI provider to fill in the gaps.

The Result: An AI Foundry Deployment with OpenAI Models & Agents In Under 200 Lines of Code

Here we see the beauty of Infrastructure as Code. Because all of the underlying complexity has been abstracted away, we can now interface with a much friendlier set of resources. This frees your developers from spending so much time on the infrastructure and lets them focus on what they need to focus on: the code. It also allows us to continue to refine and refactor the underlying modules, pushing new versions as we need to, without disrupting what’s already out there.
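
One way to get that "new versions without disruption" property is to pin each module call to a released tag. The snippet below is only a sketch of the idea: the repository URL, the tag, and every input name are hypothetical and are not the real interface of the modules described above.

```hcl
module "ai_project" {
  # Hypothetical repository; ?ref= pins this caller to a specific release,
  # so publishing v1.1.0 does not change anything for callers still on v1.0.0.
  source = "git::https://example.com/your-org/terraform-modules.git//ai-project?ref=v1.0.0"

  # Hypothetical inputs.
  name                = "my-foundry"
  location            = "eastus2"
  resource_group_name = "rg-intelligent-apps"
  project_names       = ["chat-app"]
}
```

Consumers then upgrade on their own schedule by bumping the ref and reviewing the plan.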

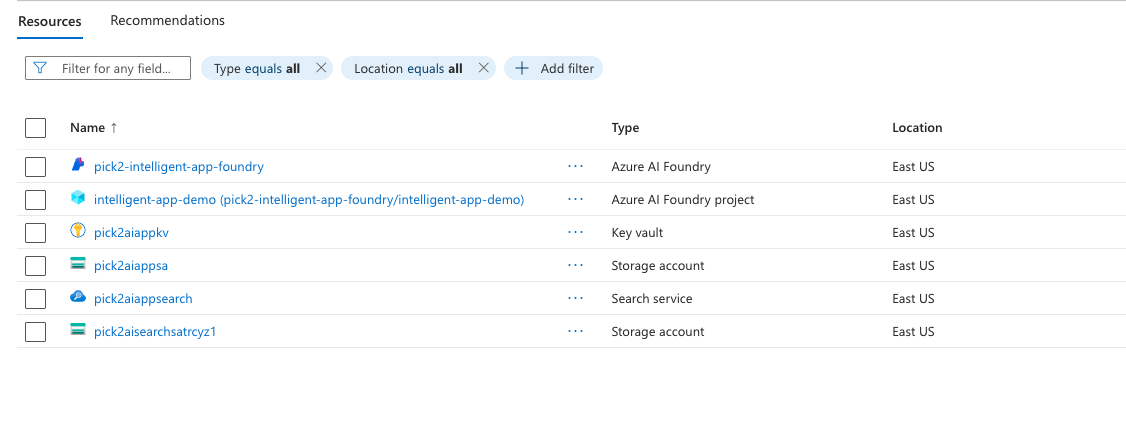

After the GitHub CI/CD pipeline does its thing, we get the following resources created in Azure:

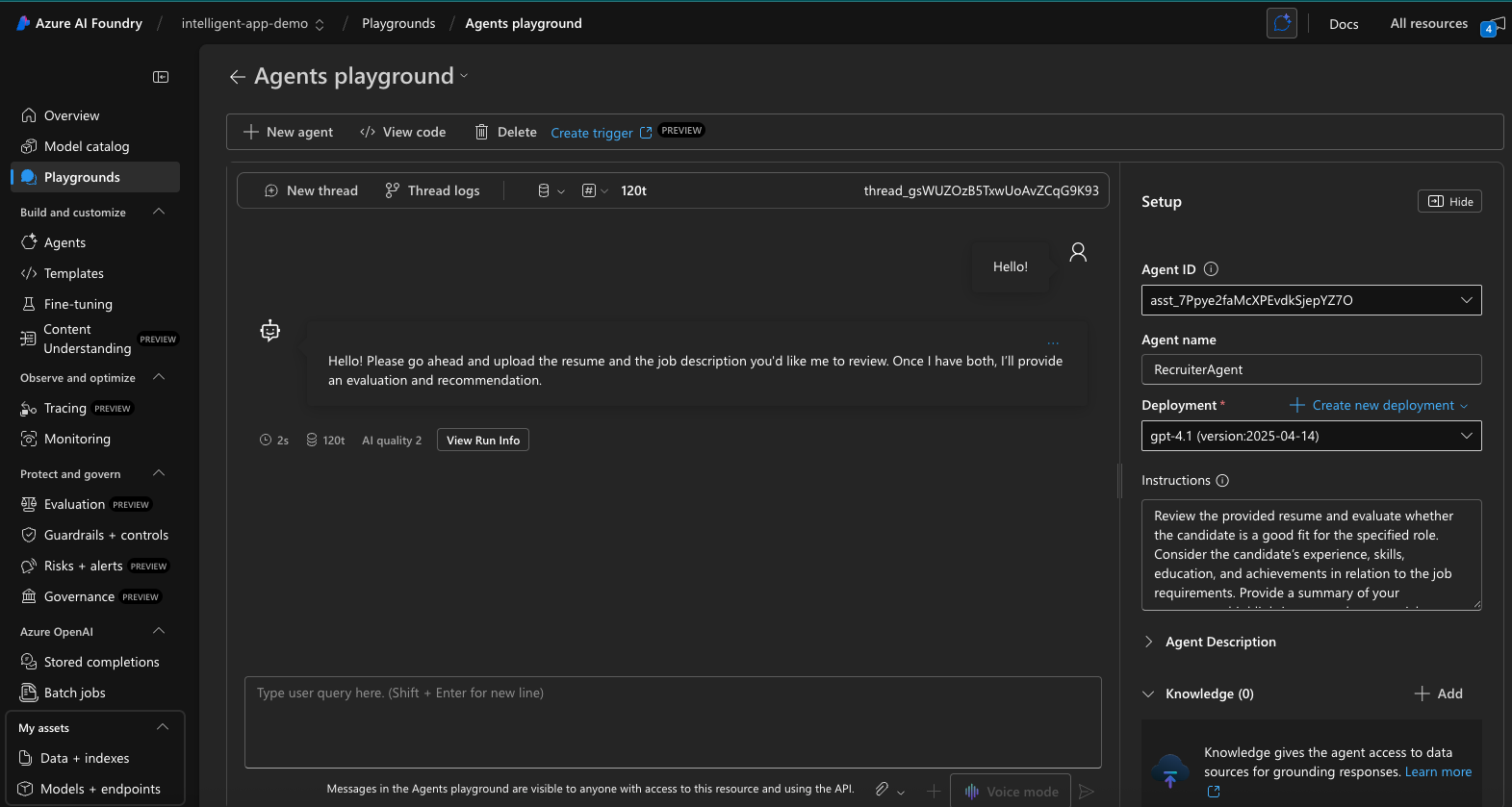

And we can start a fun little chat with our Agent!

Conclusion

Now, we haven't talked much about the other items that would make this setup truly enterprise-grade - things like private networking to keep your AI models locked down, comprehensive telemetry to track usage and performance, intelligent token capacity management to avoid those dreaded rate limit surprises, and cost optimization strategies that'll keep your CFO happy. Plus, there's the whole world of AI Search complexity with its indexers, skillsets, and data source choreography that deserves its own deep dive.

That's exactly what the next post will tackle: taking this foundation and hardening it for real-world enterprise deployment. We'll cover the networking gotchas, the monitoring setup that actually tells you useful things, and the capacity planning that prevents 3am pages about blown quotas.

But here's the thing - if you're staring at a looming AI project deadline and need this stuff figured out now, don't wait for the next blog post. I've been through all these pain points already, debugged the weird API quirks, and figured out the provider combinations that actually work. Whether you need help architecting the solution, getting your Terraform modules battle-tested, or just want someone to review your approach before you commit to production, shoot me an email or hit me up on LinkedIn.

Your AI project doesn't have to be another "we'll figure out the infrastructure later" story that turns into a six-month refactoring nightmare. Let's get it right from the start.