What You Really Want Are Intelligent Apps (Part 1)

Every week brings another LinkedIn post about another company's "AI transformation." If you're the one tasked with making this actually happen at your firm, your team is probably asking when you'll have your own AI Platform (or whether you can finally get SecOps to unblock ChatGPT). You know you need to act, but where do you start?

AI Adjacent

I've had a front-row seat to AI's evolution from academic curiosity to business necessity. Back in college, my favorite class was CSC 415 - Artificial Intelligence. We learned about the math that makes this stuff work and built Neural Networks from scratch (the kind where your laptop took three days to train a model that could barely recognize a cat). Later, when I got to Wells Fargo, I helped on a project that explored how ML could drive business insights and tighten security. Fascinating stuff, but I don’t think very much of that actually made it to production.

A few years ago, we experimented with GPT-3 for résumé parsing. The potential was obvious, the results were... let's call them "temperamental." I've seen enough brittle AI demos to know when something's ready for prime time and when it's just really good PowerPoint material.

Today is different. We're currently helping a customer build their internal AI Platform in Azure, and for the first time, this feels genuinely enterprise-ready. The "Intelligent App" pattern has emerged as the sweet spot—practical, scalable, and surprisingly robust.

This series will walk you through building an AI strategy that actually works: thinking through use cases strategically, making smart infrastructure decisions, and ensuring you can see what's happening under the hood. Because nothing says "failed AI initiative" like a black box burning through your cloud budget.

The Three Roadblocks Every Leader Hits

When executives talk about "getting into AI," the first problems usually aren't technical; they're strategic. Here are the three roadblocks I see most often:

1) What should we actually build?

Everyone says they need "an AI strategy," but few can articulate clear ROI. The temptation is to chase flashy demos or copy whatever your competitor just announced on LinkedIn.

The real opportunity? **Intelligent Apps**: practical solutions that marry your company's data with modern AI to solve specific, high-value problems. Think less "Skynet," more "that thing that finally makes our knowledge base useful."

2) How do we ensure today’s innovation isn’t tomorrow’s technical debt?

Too many AI projects start as weekend vibe-coding hackathons that somehow make it to production. They demo beautifully but crumble under real load, or become unmaintainable the moment their creator takes a job at a startup.

The fix is treating AI like any other enterprise workload: infrastructure-as-code, proper CI/CD, monitoring that doesn't require a PhD to interpret. Build it once, deploy it everywhere, sleep soundly at night.

3) How do we run this thing responsibly?

Shipping version 1.0 isn't the finish line—it's mile marker one. Your boss wants answers to the questions that keep them up at night:

What are people actually asking this thing?

How is it responding, and are those responses… appropriate?

What’s this thing costing us?

Can we track model changes and catch drift (it feels like OpenAI is pumping out a new model every week)?

Without proper monitoring and governance, AI becomes an expensive black box. The companies that win treat AI platforms like any other mission-critical system: observable, cost-controlled, and continuously improved.
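To make those questions concrete, here's a minimal sketch of the kind of record you'd want for every interaction: who asked, what came back, which model version answered, and what it cost. Everything in it is an assumption for illustration. The class names, the fields, and especially the per-token prices are hypothetical, and a real platform would ship these records to your logging and cost tooling rather than keep them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's real rates.
PRICE_PER_1K = {"prompt": 0.0025, "completion": 0.01}


@dataclass
class Interaction:
    """One question/answer exchange, with enough metadata to audit it later."""
    user: str
    prompt: str
    response: str
    model_version: str
    prompt_tokens: int
    completion_tokens: int
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    @property
    def cost_usd(self) -> float:
        # Token counts are priced separately for the prompt and the completion.
        return (
            self.prompt_tokens * PRICE_PER_1K["prompt"]
            + self.completion_tokens * PRICE_PER_1K["completion"]
        ) / 1000


class InteractionLog:
    """In-memory stand-in for a real logging pipeline."""

    def __init__(self) -> None:
        self.records: list[Interaction] = []

    def record(self, interaction: Interaction) -> None:
        self.records.append(interaction)

    def total_cost(self) -> float:
        return sum(r.cost_usd for r in self.records)

    def cost_by_model(self) -> dict[str, float]:
        # Grouping by model version makes it visible when a model swap changes spend.
        totals: dict[str, float] = {}
        for r in self.records:
            totals[r.model_version] = totals.get(r.model_version, 0.0) + r.cost_usd
        return totals
```

Even a record this simple answers three of the four questions above: the prompt and response fields show what people ask and what they get back, the token counts drive cost, and the model version gives you something to compare when behavior changes.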

So, What Should You Build?

For most organizations, the highest ROI comes from an Intelligent App that:

Works with your existing data: pulling from internal systems, documents, and knowledge bases.

Delivers insights through familiar interfaces: typically chat or search experiences that carry your company's branding and don't require training your users on yet another tool.

Scales across use cases: once you nail the baseline architecture, you can expand to customer support, employee productivity, or decision support without starting from scratch.

Think of this as your "maximum bang for buck" use case: a way to make your organization's data genuinely useful by pairing it with modern AI models wrapped in interfaces your teams already understand.

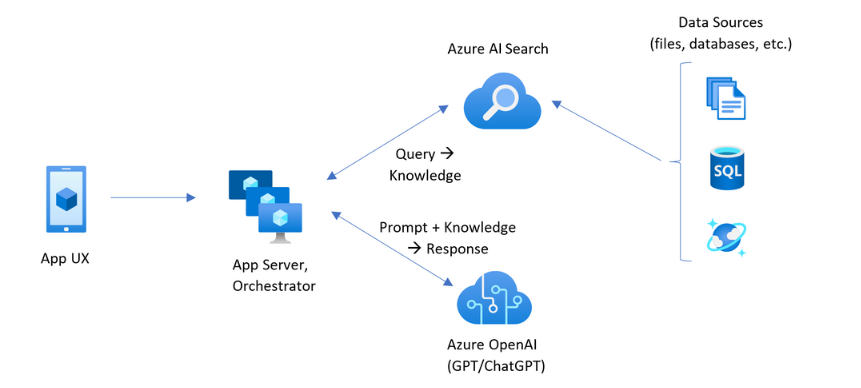

We'll introduce a reference architecture that shows how your data, Azure AI Search, and Azure OpenAI come together inside Azure AI Foundry. The key insight: we're not building a cool demo—we're building an Enterprise AI baseline that you can manage, secure, and extend with confidence.

And because we're standing it up with Infrastructure as Code, it's not a one-off science project. It's a repeatable, auditable foundation that can grow with your ambitions (and survive your next org chart shuffle).

Here’s Microsoft’s reference architecture. We’ll be using it as a guideline throughout this series:

Microsoft RAG Reference Architecture
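If the retrieve-then-generate idea behind this architecture is new to you, here's a toy sketch of the flow in plain Python. It is not the reference architecture and it calls neither Azure AI Search nor Azure OpenAI. The keyword-overlap scoring stands in for a real search service, the documents are made up, and the function names are hypothetical. The point is only the shape: find the relevant company data first, then hand it to the model alongside the question.

```python
import re

# Made-up internal documents standing in for your real knowledge base.
DOCUMENTS = {
    "vpn-policy": "Employees must use the corporate VPN for remote work.",
    "expense-policy": "Expense reports are due within 30 days of purchase.",
    "onboarding": "New hires receive a laptop and a badge on day one.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return ids of the documents sharing the most words with the question.

    A naive stand-in for a search service: no embeddings, no ranking model.
    """
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(body)), doc_id)
        for doc_id, body in documents.items()
    ]
    # Highest overlap first; ties broken alphabetically so results are stable.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in ranked if score > 0][:top_k]


def build_prompt(question: str, doc_ids: list[str], documents: dict[str, str]) -> str:
    """Assemble the grounded prompt that would be sent to the language model."""
    sources = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite them by id.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    question = "When are expense reports due?"
    hits = retrieve(question, DOCUMENTS)
    print(build_prompt(question, hits, DOCUMENTS))
```

In a real deployment the retrieval step is where your search index lives and the final prompt goes to a hosted model, but the division of labor stays the same, which is why the baseline extends so easily to new use cases.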

What’s Next

We've covered the real challenge: cutting through the AI hype, choosing the right first use case, and focusing on Intelligent Apps that deliver measurable ROI. More importantly, we've outlined how this first step creates a repeatable, enterprise-ready baseline for AI across your organization.

In Part 2, we'll get our hands dirty with the actual implementation:

The Terraform modules the team built (and battle-tested) to support our customer’s Intelligent App journey.

How to leverage CI/CD pipelines to spin up the foundation quickly and consistently.

The enterprise features that separate real platforms from expensive demos—monitoring, alerting, and cost analytics that keep your AI investment observable and manageable.

If you’re interested in chatting through how we can set this up for your organization, feel free to email us at info@pick2solutions.com.